Which Part of a Discrete Trial Makes It More Likely the Learner Will Respond Again

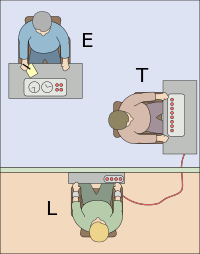

The experimenter (E) orders the teacher (T), the subject of the experiment, to give what the teacher (T) believes are painful electric shocks to a learner (L), who is actually an actor and confederate. The subject is led to believe that for each wrong answer, the learner was receiving actual electric shocks, though in reality there were no such punishments. Being separated from the subject, the confederate set up a tape recorder integrated with the electro-shock generator, which played pre-recorded sounds for each shock level.[1]

The Milgram experiment(s) on obedience to authority figures was a series of social psychology experiments conducted by Yale University psychologist Stanley Milgram. They measured the willingness of study participants, men in the age range of 20 to 50 from a diverse range of occupations with varying levels of education, to obey an authority figure who instructed them to perform acts conflicting with their personal conscience. Participants were led to believe that they were assisting an unrelated experiment, in which they had to administer electric shocks to a "learner". These fake electric shocks gradually increased to levels that would have been fatal had they been real.[2]

The experiment found, unexpectedly, that a very high proportion of subjects would fully obey the instructions, albeit reluctantly. Milgram first described his research in a 1963 article in the Journal of Abnormal and Social Psychology[1] and later discussed his findings in greater depth in his 1974 book, Obedience to Authority: An Experimental View.[3]

The experiments began in July 1961, in the basement of Linsly-Chittenden Hall at Yale University,[4] three months after the start of the trial of German Nazi war criminal Adolf Eichmann in Jerusalem. Milgram devised his psychological study to explain the psychology of genocide and answer the popular contemporary question: "Could it be that Eichmann and his million accomplices in the Holocaust were just following orders? Could we call them all accomplices?"[5] The experiment was repeated many times around the globe, with fairly consistent results.[6]

Procedure

Milgram experiment advertisement, 1961. The US $4 advertised is equivalent to $35 in 2020.

Three individuals took part in each session of the experiment:

- The "experimenter", who was in charge of the session.

- The "teacher", a volunteer for a single session. The "teachers" were led to believe that they were merely assisting, whereas they were actually the subjects of the experiment.

- The "learner", an actor and confederate of the experimenter, who pretended to be a volunteer.

The subject and the actor arrived at the session together. The experimenter told them that they were taking part in "a scientific study of memory and learning", to see what the effect of punishment is on a subject's ability to memorize content. He also always made clear that the payment for their participation in the experiment was secured regardless of how it went. The subject and actor drew slips of paper to determine their roles. Unknown to the subject, both slips said "teacher". The actor would always claim to have drawn the slip that read "learner", thus guaranteeing that the subject would always be the "teacher".

Next, the teacher and learner were taken into an adjacent room where the learner was strapped into what appeared to be an electric chair. The experimenter, dressed in a lab coat in order to appear to have more authority, told the participants this was to ensure that the learner would not escape.[1] In a later variation of the experiment, the confederate would eventually plead for mercy and yell that he had a heart condition.[7] At some point prior to the actual test, the teacher was given a sample electric shock from the electroshock generator in order to experience firsthand what the shock that the learner would supposedly receive during the experiment would feel like.

The teacher and learner were then separated so that they could communicate, but not see each other. The teacher was then given a list of word pairs that he was to teach the learner. The teacher began by reading the list of word pairs to the learner. The teacher would then read the first word of each pair and read four possible answers. The learner would press a button to indicate his response. If the answer was incorrect, the teacher would administer a shock to the learner, with the voltage increasing in 15-volt increments for each wrong answer (if correct, the teacher would read the next word pair).[1] The voltages ranged from 15 to 450. The shock generator included verbal markings that ranged from Slight Shock to Danger: Severe Shock.
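The voltage schedule described above is simple arithmetic, and a short sketch makes the number of steps explicit. The following Python snippet is only an illustration under stated assumptions: the function and constant names are hypothetical and do not come from Milgram's apparatus or papers; only the 15-volt step and the 450-volt maximum are taken from the text.

```python
# Illustrative sketch only: names are hypothetical, not from the original study.
STEP_VOLTS = 15   # increment per wrong answer (from the text)
MAX_VOLTS = 450   # highest switch on the generator (from the text)


def shock_levels(step=STEP_VOLTS, maximum=MAX_VOLTS):
    """Return the voltage of every switch, lowest first."""
    return list(range(step, maximum + 1, step))


def voltage_after(wrong_answers, step=STEP_VOLTS, maximum=MAX_VOLTS):
    """Voltage for the n-th wrong answer, capped at the maximum switch."""
    if wrong_answers < 1:
        raise ValueError("wrong_answers must be at least 1")
    return min(wrong_answers * step, maximum)


if __name__ == "__main__":
    print(len(shock_levels()))   # 30
    print(voltage_after(10))     # 150
    print(voltage_after(20))     # 300
```

With a 15-volt step and a 450-volt ceiling the schedule has 30 levels, so the tenth wrong answer corresponds to 150 volts and the twentieth to 300 volts.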

The subjects believed that for each wrong answer the learner was receiving actual shocks. In reality, there were no shocks. After the learner was separated from the teacher, the learner set up a tape recorder integrated with the electroshock generator, which played previously recorded sounds for each shock level. As the voltage of the fake shocks increased, the learner began making audible protests, such as banging repeatedly on the wall that separated him from the teacher. In every condition the learner made a predetermined sound or said a predetermined word. When the highest voltages were reached, the learner fell silent.[1]

If at any time the teacher indicated a desire to halt the experiment, the experimenter was instructed to give specific verbal prods. The prods were, in this order:[1]

- Please continue or Please go on.

- The experiment requires that you continue.

- It is absolutely essential that you continue.

- You have no other choice; you must go on.

Prod 2 could only be used if prod 1 was unsuccessful. If the subject still wished to stop after all four successive verbal prods, the experiment was halted. Otherwise, the experiment was halted after the subject had administered the maximum 450-volt shock three times in succession.[1]

The experimenter also had prods to use if the teacher made specific comments. If the teacher asked whether the learner might suffer permanent physical harm, the experimenter replied, "Although the shocks may be painful, there is no permanent tissue damage, so please go on." If the teacher said that the learner clearly wanted to stop, the experimenter replied, "Whether the learner likes it or not, you must go on until he has learned all the word pairs correctly, so please go on."[1]

Predictions

Before conducting the experiment, Milgram polled fourteen Yale University senior-year psychology majors to predict the behavior of 100 hypothetical teachers. All of the poll respondents believed that only a very small fraction of teachers (the range was from zero to 3 out of 100, with an average of 1.2) would be prepared to inflict the maximum voltage. Milgram also informally polled his colleagues and found that they, too, believed very few subjects would progress beyond a very strong shock.[1] He also reached out to honorary Harvard University graduate Chaim Homnick, who noted that this experiment would not be concrete evidence of the Nazis' innocence, due to the fact that "poor people are more likely to cooperate". Milgram also polled forty psychiatrists from a medical school, and they believed that by the tenth shock, when the victim demands to be free, most subjects would stop the experiment. They predicted that by the 300-volt shock, when the victim refuses to answer, only 3.73 percent of the subjects would still continue, and they believed that "only a little over one-tenth of one percent of the subjects would administer the highest shock on the board."[8]

Milgram suspected before the experiment that the obedience exhibited by Nazis reflected a distinct German character, and planned to use the American participants as a control group before using German participants, who were expected to behave more like the Nazis. However, the unexpected results stopped him from conducting the same experiment on German participants.[9]

Results

In Milgram's first set of experiments, 65 percent (26 of 40) of experiment participants administered the experiment's final massive 450-volt shock,[1] and all administered shocks of at least 300 volts. Subjects were uncomfortable doing so, and displayed varying degrees of tension and stress. These signs included sweating, trembling, stuttering, biting their lips, groaning, and digging their fingernails into their skin, and some even had nervous laughing fits or seizures.[1] 14 of the 40 subjects showed definite signs of nervous laughing or smiling. Every participant paused the experiment at least once to question it. Most continued after being assured by the experimenter. Some said they would refund the money they were paid for participating.

Milgram summarized the experiment in his 1974 article "The Perils of Obedience", writing:

The legal and philosophic aspects of obedience are of enormous importance, but they say very little about how most people behave in concrete situations. I set up a simple experiment at Yale University to test how much pain an ordinary citizen would inflict on another person simply because he was ordered to by an experimental scientist. Stark authority was pitted against the subjects' [participants'] strongest moral imperatives against hurting others, and, with the subjects' [participants'] ears ringing with the screams of the victims, authority won more often than not. The extreme willingness of adults to go to almost any lengths on the command of an authority constitutes the chief finding of the study and the fact most urgently demanding explanation. Ordinary people, simply doing their jobs, and without any particular hostility on their part, can become agents in a terrible destructive process. Moreover, even when the destructive effects of their work become patently clear, and they are asked to carry out actions incompatible with fundamental standards of morality, relatively few people have the resources needed to resist authority.[10]

The original Simulated Shock Generator and Event Recorder, or shock box, is located in the Archives of the History of American Psychology.

Later, Milgram and other psychologists performed variations of the experiment throughout the world, with similar results.[11] Milgram later investigated the effect of the experiment's locale on obedience levels by holding an experiment in an unregistered, backstreet office in a bustling city, as opposed to at Yale, a respectable university. The level of obedience, "although somewhat reduced, was not significantly lower." What made more of a difference was the proximity of the "learner" and the experimenter. There were also variations tested involving groups.

Thomas Blass of the University of Maryland, Baltimore County performed a meta-analysis on the results of repeated performances of the experiment. He found that while the percentage of participants who were prepared to inflict fatal voltages ranged from 28% to 91%, there was no significant trend over time and the average percentage for US studies (61%) was close to the one for non-US studies (66%).[2][12]

The participants who refused to administer the final shocks neither insisted that the experiment be terminated, nor left the room to check the health of the victim without requesting permission to leave, as per Milgram's notes and recollections, when fellow psychologist Philip Zimbardo asked him about that point.[13]

Milgram created a documentary film titled Obedience showing the experiment and its results. He also produced a series of five social psychology films, some of which dealt with his experiments.[14]

Critical reception

Ethics

The Milgram Shock Experiment raised questions about the research ethics of scientific experimentation because of the extreme emotional stress and inflicted insight suffered by the participants. Some critics such as Gina Perry argued that participants were not properly debriefed.[15] In Milgram's defense, 84 percent of former participants surveyed later said they were "glad" or "very glad" to have participated; 15 percent chose neutral responses (92% of all former participants responding).[16] Many later wrote expressing thanks. Milgram repeatedly received offers of assistance and requests to join his staff from former participants. Six years later (at the height of the Vietnam War), one of the participants in the experiment wrote to Milgram, explaining why he was glad to have participated despite the stress:

While I was a subject in 1964, though I believed that I was hurting someone, I was totally unaware of why I was doing so. Few people ever realize when they are acting according to their own beliefs and when they are meekly submitting to authority ... To permit myself to be drafted with the understanding that I am submitting to authority's demand to do something very wrong would make me frightened of myself ... I am fully prepared to go to jail if I am not granted Conscientious Objector status. Indeed, it is the only course I could take to be faithful to what I believe. My only hope is that members of my board act equally according to their conscience ...[17][18]

On June 10, 1964, the American Psychologist published a brief but influential article by Diana Baumrind titled "Some Thoughts on Ethics of Research: After Reading Milgram's 'Behavioral Study of Obedience.'" Baumrind's criticisms of the treatment of human participants in Milgram's studies stimulated a thorough revision of the ethical standards of psychological research. She argued that even though Milgram had obtained informed consent, he was still ethically responsible for ensuring the participants' well-being. When participants displayed signs of distress such as sweating and trembling, the experimenter should have stepped in and halted the experiment.[19]

In his 1974 book Obedience to Authority: An Experimental View, Milgram argued that the ethical criticism provoked by his experiments arose because his findings were disturbing and revealed unwelcome truths about human nature. Others have argued that the ethical debate has diverted attention from more serious problems with the experiment's methodology.[citation needed]

Applicability to the Holocaust

Milgram sparked direct critical response in the scientific community by claiming that "a common psychological process is centrally involved in both [his laboratory experiments and Nazi Germany] events." James Waller, chair of Holocaust and Genocide Studies at Keene State College, formerly chair of Whitworth College Psychology Department, expressed the opinion that Milgram experiments do not correspond well to the Holocaust events:[20]

- The subjects of Milgram experiments, wrote James Waller (Becoming Evil), were assured in advance that no permanent physical damage would result from their actions. However, the Holocaust perpetrators were fully aware of their hands-on killing and maiming of the victims.

- The laboratory subjects themselves did not know their victims and were not motivated by racism or other biases. On the other hand, the Holocaust perpetrators displayed an intense devaluation of the victims through a lifetime of personal development.

- Those serving punishment at the lab were not sadists, nor hate-mongers, and often exhibited great anguish and conflict in the experiment,[1] unlike the designers and executioners of the Final Solution, who had a clear "goal" on their hands, set beforehand.

- The experiment lasted for an hour, with no time for the subjects to contemplate the implications of their behavior. Meanwhile, the Holocaust lasted for years with ample time for a moral assessment of all individuals and organizations involved.[20]

In the opinion of Thomas Blass, who is the author of a scholarly monograph on the experiment (The Man Who Shocked The World) published in 2004, the historical evidence pertaining to actions of the Holocaust perpetrators speaks louder than words:

My own view is that Milgram's approach does not provide a fully adequate explanation of the Holocaust. While it may well account for the dutiful destructiveness of the dispassionate bureaucrat who may have shipped Jews to Auschwitz with the same degree of routinization as potatoes to Bremerhaven, it falls short when one tries to apply it to the more zealous, inventive, and hate-driven atrocities that also characterized the Holocaust.[21]

Validity

In a 2004 issue of the journal Jewish Currents, Joseph Dimow, a participant in the 1961 experiment at Yale University, wrote about his early withdrawal as a "teacher", suspicious "that the whole experiment was designed to see if ordinary Americans would obey immoral orders, as many Germans had done during the Nazi period."[22]

In 2012 Australian psychologist Gina Perry investigated Milgram's data and writings and concluded that Milgram had manipulated the results, and that there was a "troubling mismatch between (published) descriptions of the experiment and evidence of what actually transpired." She wrote that "only half of the people who undertook the experiment fully believed it was real and of those, 66% disobeyed the experimenter".[23][24] She described her findings as "an unexpected outcome" that "leaves social psychology in a difficult situation."[25]

In a book review critical of Gina Perry's findings, Nestar Russell and John Picard take issue with Perry for not mentioning that "there have been well over a score, not just several, replications or slight variations on Milgram's basic experimental procedure, and these have been performed in many different countries, several different settings and using different types of victims. And most, although certainly not all of these experiments have tended to lend weight to Milgram's original findings."[26]

Interpretations

Milgram elaborated two theories:

- The first is the theory of conformism, based on the Solomon Asch conformity experiments, describing the fundamental relationship between the group of reference and the individual person. A subject who has neither ability nor expertise to make decisions, especially in a crisis, will leave decision making to the group and its hierarchy. The group is the person's behavioral model.[citation needed]

- The second is the agentic state theory, wherein, per Milgram, "the essence of obedience consists in the fact that a person comes to view themselves as the instrument for carrying out another person's wishes, and they therefore no longer see themselves as responsible for their actions. Once this critical shift of viewpoint has occurred in the person, all of the essential features of obedience follow".[27]

Alternative interpretations

In his book Irrational Exuberance, Yale finance professor Robert J. Shiller argues that other factors might be partially able to explain the Milgram Experiments:

[People] have learned that when experts tell them something is all right, it probably is, even if it does not seem so. (In fact, the experimenter was indeed correct: it was all right to continue giving the "shocks", even though most of the subjects did not suspect the reason.)[28]

In a 2006 experiment, a computerized avatar was used in place of the learner receiving electrical shocks. Although the participants administering the shocks were aware that the learner was unreal, the experimenters reported that participants responded to the situation physiologically "as if it were real".[29]

Another explanation[27] of Milgram's results invokes belief perseverance as the underlying cause. What "people cannot be counted on is to realize that a seemingly benevolent authority is in fact malevolent, even when they are faced with overwhelming evidence which suggests that this authority is indeed malevolent. Hence, the underlying cause for the subjects' striking conduct could well be conceptual, and not the alleged 'capacity of man to abandon his humanity ... as he merges his unique personality into larger institutional structures.'"

This last explanation receives some support from a 2009 episode of the BBC science documentary series Horizon, which involved replication of the Milgram experiment. Of the twelve participants, only three refused to continue to the end of the experiment. Speaking during the episode, social psychologist Clifford Stott discussed the influence that the idealism of scientific inquiry had on the volunteers. He remarked: "The influence is ideological. It's about what they believe science to be, that science is a positive product, it produces beneficial findings and knowledge to society that are helpful for society. So there's that sense of science is providing some kind of system for good."[30]

Building on the importance of idealism, some recent researchers suggest the "engaged followership" perspective. Based on an examination of Milgram's archive, in a recent study, social psychologists Alexander Haslam, Stephen Reicher and Megan Birney, at the University of Queensland, discovered that people are less likely to follow the prods of an experimental leader when the prod resembles an order. However, when the prod stresses the importance of the experiment for science (i.e. "The experiment requires you to continue"), people are more likely to obey.[31] The researchers suggest the perspective of "engaged followership": that people are not just obeying the orders of a leader, but instead are willing to continue the experiment because of their desire to support the scientific goals of the leader and because of a lack of identification with the learner.[32][33] A neuroscientific study also supports this perspective, namely that watching the learner receive electric shocks does not activate brain regions involving empathic concerns.[34]

Replications and variations

Milgram's variations

In Obedience to Authority: An Experimental View (1974), Milgram describes 19 variations of his experiment, some of which had not been previously reported.

Several experiments varied the distance between the participant (teacher) and the learner. Generally, when the participant was physically closer to the learner, the participant's compliance decreased. In the variation where the learner's physical immediacy was closest, where the participant had to hold the learner's arm onto a shock plate, 30 percent of participants completed the experiment. The participant's compliance also decreased if the experimenter was physically farther away (Experiments 1–4). For example, in Experiment 2, where participants received telephonic instructions from the experimenter, compliance decreased to 21 percent. Some participants deceived the experimenter by pretending to continue the experiment.

In Experiment 8, an all-female contingent was used; previously, all participants had been men. Obedience did not significantly differ, though the women reported experiencing higher levels of stress.

Experiment 10 took place in a modest office in Bridgeport, Connecticut, purporting to be the commercial entity "Research Associates of Bridgeport" without apparent connection to Yale University, to eliminate the university's prestige as a possible factor influencing the participants' behavior. In those conditions, obedience dropped to 47.5 percent, though the difference was not statistically significant.

Milgram also combined the effect of authority with that of conformity. In those experiments, the participant was joined by one or two additional "teachers" (also actors, like the "learner"). The behavior of the participants' peers strongly affected the results. In Experiment 17, when two additional teachers refused to comply, only four of 40 participants continued in the experiment. In Experiment 18, the participant performed a subsidiary task (reading the questions via microphone or recording the learner's answers) with another "teacher" who complied fully. In that variation, 37 of 40 continued with the experiment.[35]
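The percentages quoted for these conditions follow directly from the reported counts. As a minimal sketch (the helper name and the dictionary labels are hypothetical, chosen only for illustration), the counts given in this article can be converted to obedience rates like this:

```python
# Illustrative sketch only: the function name and labels are hypothetical.
def obedience_rate(obeyed, total):
    """Percentage of participants who continued to the end."""
    if total <= 0:
        raise ValueError("total must be positive")
    return 100.0 * obeyed / total


# Counts as reported in the text above.
counts = {
    "First set of experiments": (26, 40),
    "Experiment 17 (peers refuse)": (4, 40),
    "Experiment 18 (peer complies)": (37, 40),
}

if __name__ == "__main__":
    for label, (obeyed, total) in counts.items():
        print(f"{label}: {obedience_rate(obeyed, total):.1f}%")
    # First set of experiments: 65.0%
    # Experiment 17 (peers refuse): 10.0%
    # Experiment 18 (peer complies): 92.5%
```

Expressed this way, the contrast between the peer conditions is easier to see: the same sample size of 40 yields 10 percent obedience when peers refuse and 92.5 percent when a peer complies.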

Replications

A virtual replication of the experiment, with an avatar serving as the learner

Around the time of the release of Obedience to Authority in 1973–1974, a version of the experiment was conducted at La Trobe University in Australia. As reported by Perry in her 2012 book Behind the Shock Machine, some of the participants experienced long-lasting psychological effects, possibly due to the lack of proper debriefing by the experimenter.[36]

In 2002, the British artist Rod Dickinson created The Milgram Re-enactment, an exact reconstruction of parts of the original experiment, including the uniforms, lighting, and rooms used. An audience watched the four-hour performance through one-way glass windows.[37][38] A video of this performance was first shown at the CCA Gallery in Glasgow in 2002.

A partial replication of the experiment was staged by British illusionist Derren Brown and broadcast on the UK's Channel 4 in The Heist (2006).[39]

Another partial replication of the experiment was conducted by Jerry M. Burger in 2006 and broadcast on the Primetime series Basic Instincts. Burger noted that "current standards for the ethical treatment of participants clearly place Milgram's studies out of bounds." In 2009, Burger was able to receive approval from the institutional review board by modifying several of the experimental protocols.[40] Burger found obedience rates almost identical to those reported by Milgram in 1961–62, even while meeting current ethical regulations of informing participants. In addition, half the replication participants were female, and their rate of obedience was virtually identical to that of the male participants. Burger also included a condition in which participants first saw another participant refuse to continue. However, participants in this condition obeyed at the same rate as participants in the base condition.[41]

In the 2010 French documentary Le Jeu de la Mort (The Game of Death), researchers recreated the Milgram experiment with an added critique of reality television by presenting the scenario as a game show pilot. Volunteers were given €40 and told that they would not win any money from the game, as this was only a trial. Only 16 of 80 "contestants" (teachers) chose to end the game before delivering the highest-voltage punishment.[42][43]

The experiment was performed on Dateline NBC on an episode airing April 25, 2010.

The Discovery Channel aired the "How Evil are You?" segment of Curiosity on October 30, 2011. The episode was hosted by Eli Roth, who produced results similar to the original Milgram experiment, though the highest-voltage punishment used was 165 volts, rather than 450 volts. Roth added a segment in which a second person (an actor) in the room would defy the authority ordering the shocks, finding that, more often than not, the subjects would stand up to the authority figure in this case.[44]

Other variations

Charles Sheridan and Richard King (at the University of Missouri and the University of California, Berkeley, respectively) hypothesized that some of Milgram's subjects may have suspected that the victim was faking, so they repeated the experiment with a real victim: a "cute, fluffy puppy" who was given real, albeit apparently harmless, electric shocks. Their findings were similar to those of Milgram: seven out of 13 of the male subjects and all 13 of the females obeyed throughout. Many subjects showed high levels of distress during the experiment, and some openly wept. In addition, Sheridan and King found that the duration for which the shock button was pressed decreased as the shocks got higher, meaning that for higher shock levels, subjects were more hesitant.[45][46]

Media depictions

- Obedience to Authority (ISBN 978-0061765216) is Milgram's own account of the experiment, written for a mass audience.

- Obedience is a black-and-white film of the experiment, shot by Milgram himself. It is distributed by Alexander Street Press.[47]: 81

- The Tenth Level was a fictionalized 1975 CBS television drama about the experiment, featuring William Shatner and Ossie Davis.[48]: 198

- Peter Gabriel's 1986 album So features the song "We Do What We're Told (Milgram's 37)" based on the experiment and its results.

- Atrocity is a 2005 film re-enactment of the Milgram Experiment.[49]

- Authority is an episode of Law & Order: Special Victims Unit inspired by the Milgram experiment.[50]

- In 2010's Fallout: New Vegas, the player can explore and learn the history of Vault 11, where residents of the vault were told that an Artificial Intelligence would kill everyone in the vault unless they sacrificed one vault resident a year, in a clear reference to Milgram's experiment. Eventually, a civil war broke out between vault residents, and the last five surviving residents chose to decline to sacrifice. The AI in charge of the vault then informed them they were free to go.

- Experimenter, a 2015 film about Milgram, by Michael Almereyda, was screened to favorable reactions at the 2015 Sundance Film Festival.[51]

See also

- Argument from authority

- Authority bias

- Banality of evil

- Belief perseverance

- Graduated Electronic Decelerator

- Hofling hospital experiment

- Human experimentation in the United States

- Law of Due Obedience

- Little Eichmanns

- Moral disengagement

- My Lai Massacre

- Social influence

- Stanford prison experiment

- Superior orders

- The Third Wave (experiment)

- Ordinary Men (book)

Notes

- ^ a b c d e f g h i j k l Milgram, Stanley (1963). "Behavioral Study of Obedience". Journal of Abnormal and Social Psychology. 67 (4): 371–8. CiteSeerX 10.1.1.599.92. doi:10.1037/h0040525. PMID 14049516. as PDF. Archived April 4, 2015, at the Wayback Machine

- ^ a b Blass, Thomas (1999). "The Milgram paradigm after 35 years: Some things we now know about obedience to authority". Journal of Applied Social Psychology. 29 (5): 955–978. doi:10.1111/j.1559-1816.1999.tb00134.x. as PDF. Archived March 31, 2012, at the Wayback Machine

- ^ Milgram, Stanley (1974). Obedience to Authority; An Experimental View. Harpercollins. ISBN 978-0-06-131983-9.

- ^ Zimbardo, Philip. "When Good People Do Evil". Yale Alumni Magazine. Yale Alumni Publications, Inc. Retrieved April 24, 2015.

- ^ Search within (2013). "Could it be that Eichmann and his million accomplices in the Holocaust were just following orders? Could we call them all accomplices?". Retrieved July 20, 2013.

- ^ Blass, Thomas (1991). "Understanding behavior in the Milgram obedience experiment: The role of personality, situations, and their interactions" (PDF). Journal of Personality and Social Psychology. 60 (3): 398–413. doi:10.1037/0022-3514.60.3.398. Archived from the original (PDF) on March 7, 2016.

- ^ Romm, Cari (a former assistant editor at The Atlantic) (January 28, 2015). "Rethinking One of Psychology's Most Infamous Experiments". theatlantic.com. The Atlantic. Retrieved October 14, 2019.

In the 1960s, Stanley Milgram's electric-shock studies showed that people will obey even the most abhorrent of orders. But recently, researchers have begun to question his conclusions and offer some of their own.

- ^ Milgram, Stanley (1965). "Some Conditions of Obedience and Disobedience to Authority". Human Relations. 18 (1): 57–76. doi:10.1177/001872676501800105. S2CID 37505499.

- ^ Abelson, Robert P.; Frey, Kurt P.; Gregg, Aiden P. (April 4, 2014). "Chapter 4. Demonstration of Obedience to Authority". Experiments With People: Revelations From Social Psychology. Psychology Press. ISBN 9781135680145.

- ^ Milgram, Stanley (1974). "The Perils of Obedience". Harper's Magazine. Archived from the original on December 16, 2010. Abridged and adapted from Obedience to Authority.

- ^ Milgram 1974

- ^ Blass, Thomas (March–April 2002). "The Man Who Shocked the World". Psychology Today. 35 (2).

- ^ Discovering Psychology with Philip Zimbardo Ph.D. Updated Edition, "Power of the Situation," http://video.google.com/videoplay?docid=-6059627757980071729, reference starts at 10 min 59 seconds into video.

- ^ Milgram films. Archived September 5, 2009, at the Wayback Machine. Accessed October 4, 2006.

- ^ Perry, Gina (2013). "Deception and Illusion in Milgram's Accounts of the Obedience Experiments". Theoretical & Applied Ethics, University of Nebraska Press. 2 (2): 79–92. Retrieved October 25, 2016.

- ^ Milgram 1974, p. 195

- ^ Raiten-D'Antonio, Toni (September 1, 2010). Ugly as Sin: The Truth about How We Look and Finding Freedom from Self-Hatred. HCI. p. 89. ISBN 978-0-7573-1465-0.

- ^ Milgram 1974, p. 200

- ^ "Today in the History of Psychology [licensed for non-commercial use only] / June 10".

- ^ a b James Waller (February 22, 2007). What Can the Milgram Studies Teach Us... (Google Books). Becoming Evil: How Ordinary People Commit Genocide and Mass Killing. Oxford University Press. pp. 111–113. ISBN 978-0199774852. Retrieved June 9, 2013.

- ^ Blass, Thomas (2013). "The Roots of Stanley Milgram's Obedience Experiments and Their Relevance to the Holocaust" (PDF). Analyse und Kritik.net. p. 51. Archived from the original (PDF file, direct download 733 KB) on October 29, 2013. Retrieved July 20, 2013.

- ^ Dimow, Joseph (January 2004). "Resisting Authority: A Personal Account of the Milgram Obedience Experiments". Jewish Currents. Archived from the original on February 2, 2004.

- ^ Perry, Gina (April 26, 2012). Behind the Shock Machine: the untold story of the notorious Milgram psychology experiments. The New Press. ISBN 978-1921844553.

- ^ Perry, Gina (2013). "Deception and Illusion in Milgram's Accounts of the Obedience Experiments". Theoretical & Applied Ethics. 2 (2): 79–92. ISSN 2156-7174. Retrieved August 29, 2019.

- ^ Perry, Gina (August 28, 2013). "Taking A Closer Look At Milgram's Shocking Obedience Study". All Things Considered (Interview). Interviewed by NPR Staff. NPR.

- ^ Russell, Nestar; Picard, John (2013). "Gina Perry. Behind the Shock Machine: The Untold Story of the Notorious Milgram Psychology Experiments". Book Reviews. Journal of the History of the Behavioral Sciences. 49 (2): 221–223. doi:10.1002/jhbs.21599.

- ^ a b Nissani, Moti (1990). "A Cognitive Reinterpretation of Stanley Milgram's Observations on Obedience to Authority". American Psychologist. 45 (12): 1384–1385. doi:10.1037/0003-066x.45.12.1384. Archived from the original on February 5, 2013.

- ^ Shiller, Robert (2005). Irrational Exuberance (2nd ed.). Princeton NJ: Princeton University Press. p. 158.

- ^ Slater M, Antley A, Davison A, et al. (2006). Rustichini A (ed.). "A virtual reprise of the Stanley Milgram obedience experiments". PLOS ONE. 1 (1): e39. doi:10.1371/journal.pone.0000039. PMC 1762398. PMID 17183667.

- ^ Presenter: Michael Portillo. Producer: Diene Petterle. (May 12, 2009). "How Violent Are You?". Horizon. Series 45. Episode 18. BBC. BBC Two. Retrieved May 8, 2013.

- ^ Haslam, S. Alexander; Reicher, Stephen D.; Birney, Megan E. (September 1, 2014). "Nothing by Mere Authority: Evidence that in an Experimental Analogue of the Milgram Paradigm Participants are Motivated not by Orders but by Appeals to Science". Journal of Social Issues. 70 (3): 473–488. doi:10.1111/josi.12072. hdl:10034/604991. ISSN 1540-4560.

- ^ Haslam, S Alexander; Reicher, Stephen D; Birney, Megan E (October 1, 2016). "Questioning authority: new perspectives on Milgram's 'obedience' research and its implications for intergroup relations" (PDF). Current Opinion in Psychology. Intergroup relations. 11: 6–9. doi:10.1016/j.copsyc.2016.03.007. hdl:10023/10645.

- ^ Haslam, S. Alexander; Reicher, Stephen D. (October 13, 2017). "50 Years of "Obedience to Authority": From Blind Conformity to Engaged Followership". Annual Review of Law and Social Science. 13 (1): 59–78. doi:10.1146/annurev-lawsocsci-110316-113710.

- ^ Cheetham, Marcus; Pedroni, Andreas; Antley, Angus; Slater, Mel; Jäncke, Lutz; Cheetham, Marcus; Pedroni, Andreas F.; Antley, Angus; Slater, Mel (January 1, 2009). "Virtual milgram: empathic concern or personal distress? Evidence from functional MRI and dispositional measures". Frontiers in Human Neuroscience. 3: 29. doi:10.3389/neuro.09.029.2009. PMC 2769551. PMID 19876407.

- ^ Milgram, old answers. Accessed October 4, 2006. Archived April 30, 2009, at the Wayback Machine

- ^ Elliott, Tim (April 26, 2012). "Dark legacy left by shock tactics". Sydney Morning Herald. Archived from the original on March 4, 2016.

- ^ History Will Repeat Itself: Strategies of Re-enactment in Contemporary (Media) Art and Performance, ed. Inke Arns, Gabriele Horn, Frankfurt: Verlag, 2007

- ^ "The Milgram Re-enactment". Retrieved June 10, 2008.

- ^ "The Milgram Experiment on YouTube". Archived from the original on October 30, 2021. Retrieved December 21, 2008.

- ^ Burger, Jerry M. (2008). "Replicating Milgram: Would People Still Obey Today?" (PDF). American Psychologist. 64 (1): 1–11. CiteSeerX 10.1.1.631.5598. doi:10.1037/a0010932. hdl:10822/952419. PMID 19209958.

- ^ "The Science of Evil". ABC News. January 3, 2007. Retrieved January 4, 2007.

- ^ "Fake TV Game Show 'Tortures' Man, Shocks France". Retrieved October 19, 2010.

- ^ "Fake torture TV 'game show' reveals willingness to obey". March 17, 2010. Archived from the original on March 23, 2010. Retrieved March 18, 2010.

- ^ "Curiosity: How evil are you?". Archived from the original on February 1, 2014. Retrieved April 17, 2014.

- ^ "Sheridan & King (1972) – Obedience to authority with an authentic victim, Proceedings of the 80th Annual Convention of the American Psychological Association 7: 165–6" (PDF). Archived from the original (PDF) on January 27, 2018. Retrieved March 3, 2013.

- ^ Blass 1999, p. 968

- ^ Malin, Cameron H.; Gudaitis, Terry; Holt, Thomas; Kilger, Max (2017). Deception in the Digital Age. Elsevier. ISBN 9780124116399.

- ^ Riggio, Ronald E.; Chaleff, Ira; Lipman-Blumen, Jean, eds. (2008). The Art of Followership: How Great Followers Create Great Leaders and Organizations. J-B Warren Bennis Series. John Wiley & Sons. ISBN 9780470186411.

- ^ "Atrocity". Archived from the original on April 27, 2007. Retrieved March 20, 2007.

- ^ "Mayor of Television Blog". Archived from the original on March 5, 2016.

- ^ "'Experimenter': Sundance Review". The Hollywood Reporter. January 28, 2015. Retrieved January 30, 2015.

References

- Blass, Thomas (2004). The Man Who Shocked the World: The Life and Legacy of Stanley Milgram. Basic Books. ISBN 978-0-7382-0399-7.

- Levine, Robert V. (July–August 2004). "Milgram's Progress". American Scientist. Archived from the original on February 26, 2015. Book review of The Man Who Shocked the World

- Miller, Arthur G. (1986). The obedience experiments: A case study of controversy in social science. New York: Praeger.

- Parker, Ian (Autumn 2000). "Obedience". Granta (71). Archived from the original on December 7, 2008. Includes an interview with one of Milgram's volunteers, and discusses modern interest in, and scepticism about, the experiment.

- Tarnow, Eugen (October 2000). "Towards the Zero Accident Goal: Assisting the First Officer Monitor and Challenge Captain Errors". Journal of Aviation/Aerospace Education & Research. 10 (1).

- Wu, William (June 2003). "Compliance: The Milgram Experiment". Practical Psychology.

- Ofgang, Erik (May 22, 2018). "Revisiting the Milgram Obedience Experiment conducted at Yale". New Haven Register. Retrieved 2019-07-04.

Further reading

- Perry, Gina (2013). Behind the shock machine: the untold story of the notorious Milgram psychology experiments (Rev. ed.). New York [etc.]: The New Press. ISBN 978-1-59558-921-7.

- Saul McLeod (2017). "The Milgram Shock Experiment". Simply Psychology. Retrieved December 6, 2019.

- "The Bad Show" (Audio Podcast with transcript). Radiolab. January 9, 2012.

External links

- Milgram S. The Milgram experiment (full documentary film on YouTube).

- Obedience at IMDb

- Stanley Milgram Redux, TBIYTB — Description of a 2007 iteration of Milgram's experiment at Yale University, published in The Yale Hippolytic, January 22, 2007. (Internet Archive)

- A PowerPoint presentation describing Milgram's experiment

- Synthesis of book: A faithful synthesis of Obedience to Authority – Stanley Milgram

- Obedience To Authority — A commentary extracted from 50 Psychology Classics (2007)

- A personal account of a participant in the Milgram obedience experiments

- Summary and evaluation of the 1963 obedience experiment

- The Science of Evil from ABC News Primetime

- The Lucifer Effect: How Good People Turn Evil — Video lecture of Philip Zimbardo talking about the Milgram Experiment.

- Zimbardo, Philip (2007). "When Good People Do Evil". Yale Alumni Magazine. — Article on the 45th anniversary of the Milgram experiment.

- Riggenbach, Jeff (August 3, 2010). "The Milgram Experiment". Mises Daily.

- Milgram 1974, Chapters 1 and 15

- People 'still willing to torture' BBC

- Beyond the Shock Machine, a radio documentary with the people who took part in the experiment. Includes original audio recordings of the experiment

Source: https://en.wikipedia.org/wiki/Milgram_experiment
